December 22, 2025

XAI: Transparency and Power of White Box Models

Explainable AI (XAI): The Demand for Explanation in High Stakes Decisions

In school, whether high school or college, we all remember the math teacher who insisted on seeing the step-by-step work for every answer. Write only the answer and you’d likely get a bad grade. While this rule might have felt bothersome, there is a legitimate concern behind it: How can the teacher confirm you didn't cheat? How can they verify you truly understand the material? You might even arrive at the correct answer purely by chance, without grasping the concepts.

Both lenders and borrowers face the same critical question regarding automated credit scoring models: How was the final decision reached? This need for transparency is why Explainable AI (XAI) has become a regulatory expectation for automated credit decisions.

While automated credit scoring models are fundamentally a math problem, the stakes are much higher than a bad grade. For the lender, it involves the investment of substantial capital, often hundreds of thousands of dollars. For the borrower, the decision represents a critical opportunity to secure the necessary funds to launch or expand a business, impacting not only the individual but potentially the livelihoods of employees and their families. The pressure is high and the risk is critical.

1. The Hidden Risk: Why Black Box Models Are Unpredictable

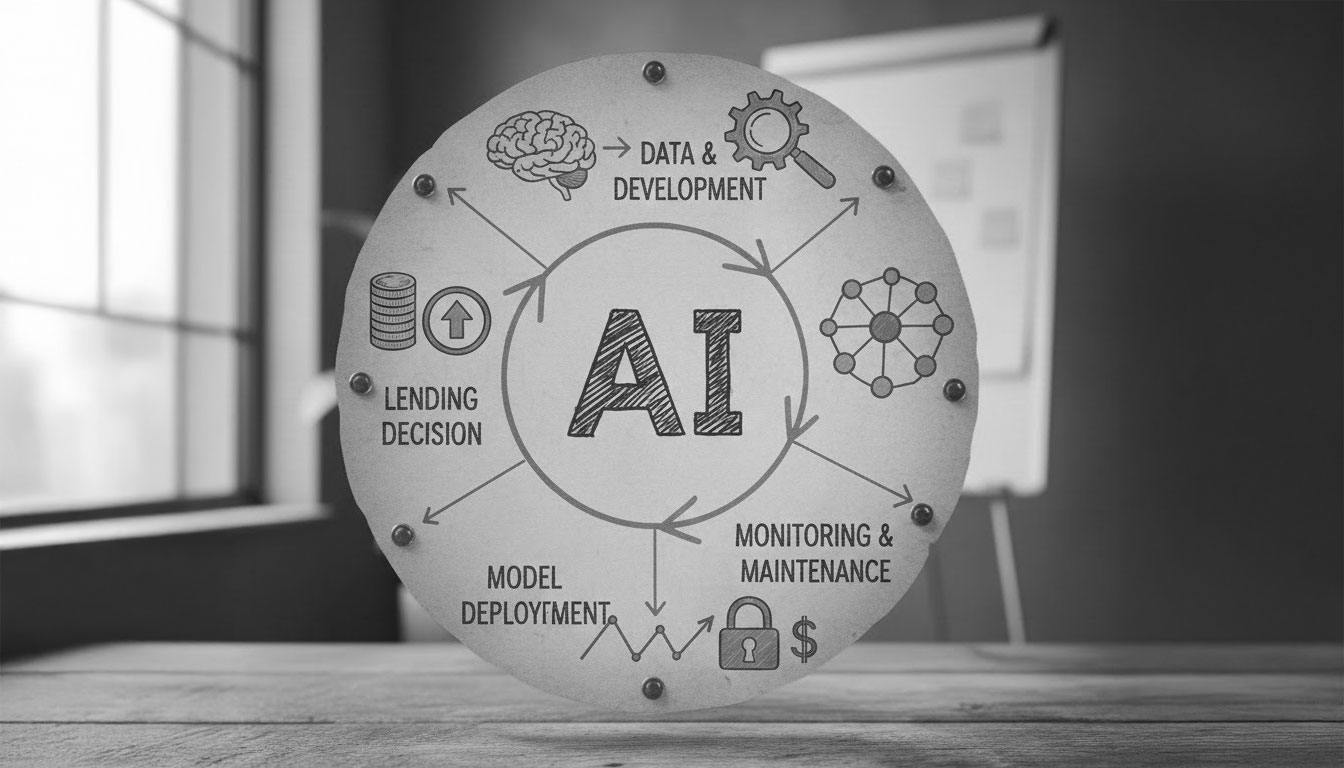

The recent surge in Artificial Intelligence (AI) has demonstrated unprecedented power, with models such as Large Language Models (LLMs) discovering previously unseen patterns. However, the lack of traceability in these models presents an insurmountable obstacle in environments where trust and regulation are mandatory.

1.1. How Does Opacity Occur in Black Box Models?

We can imagine advanced AI models as an artificial brain: millions of "neurons" arranged across many layers, exchanging information and feedback to generate an output. This complex system, leveraging millions or even billions of parameters, is the source of AI's power, but also its great vulnerability.

Although programmers design the AI's structure and curate its training data, once the model is operational, the combination of features it utilizes and the weight it assigns to those factors becomes impossible to fully trace or explain.

The term "Black Box Models" originates precisely from this opacity. We provide the input (customer data) and receive the output (the score), but the internal reasoning, the "why," remains inaccessible.

1.2. The Apple Card Case: When Bias Hides in AI

Kin Analytics warns that Black Box Models are not fully dependable for automated credit decisions in high-risk environments.

The Apple Card case in 2019 is a prime example. The card, which used an AI-driven credit underwriting system, led to complaints about significant differences in credit limits between men and women with comparable financial profiles.

Even if the issuer had not introduced gender as a direct variable, other factors can indirectly lead to biased decisions. The model's pattern recognition, processing large historical datasets, can:

- Amplify Historical Bias: Historical data often reflects past unfairness (e.g., wage gaps), which the model simply learns and replicates.

- Introduce Intersectional Bias: The model might identify patterns based on a combination of correlated variables (e.g., purchasing history at a specific store correlated with a demographic group), perpetuating marginalization without direct intent.
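The mechanism described above, where a model never sees a protected attribute but still produces different outcomes across groups, can be illustrated with a minimal sketch. Everything here is hypothetical: the applicants, the `store_segment` feature, and the toy `score` rule are invented for illustration and do not describe the Apple Card system or any real model.

```python
# Hypothetical applicants. Gender is recorded for auditing only;
# the scoring rule below never reads it.
applicants = [
    {"gender": "F", "store_segment": "A", "income": 60},
    {"gender": "F", "store_segment": "A", "income": 80},
    {"gender": "F", "store_segment": "A", "income": 70},
    {"gender": "F", "store_segment": "B", "income": 70},
    {"gender": "M", "store_segment": "B", "income": 60},
    {"gender": "M", "store_segment": "B", "income": 80},
    {"gender": "M", "store_segment": "B", "income": 70},
    {"gender": "M", "store_segment": "A", "income": 70},
]


def score(applicant):
    """Toy rule: income (in thousands) minus a penalty for store segment A."""
    penalty = 15 if applicant["store_segment"] == "A" else 0
    return applicant["income"] - penalty


def mean_score_by_gender(rows):
    """Audit step: average the scores per gender group."""
    groups = {}
    for row in rows:
        groups.setdefault(row["gender"], []).append(score(row))
    return {gender: sum(vals) / len(vals) for gender, vals in groups.items()}


audit = mean_score_by_gender(applicants)
print(audit)
```

Both groups have the same incomes in this toy data, yet the average score differs because the store segment happens to correlate with gender. In a transparent model the penalty is visible and can be questioned; in an opaque one the same effect can go unnoticed.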

1.3. Why Black Box Models are Untenable in B2B Credit

In the context of capital investment and equipment leasing, the disadvantages of opaque models are unacceptable:

- Increased Processing Times: Credit analysts cannot quickly review the causes of a decision. This mandates extensive manual review of applications, eliminating the efficiency sought through automation.

- Reputational and Ethical Risk: A potentially biased output cannot be detected or corrected if the pattern that generated it cannot be traced, exposing the company to severe reputational and legal risks.

- Regulatory Non-Compliance: The model becomes indefensible during audits. SR 11-7, the U.S. Federal Reserve's Guidance on Model Risk Management, calls for validation and auditability of models, which cannot be achieved when the internal process is a Black Box.

2. The Solution is Transparency: The Foundation of Explainable AI (XAI)

In contrast to the opacity of Black Box Models, Explainable AI (XAI) forms the core of Kin Analytics' solutions.

The European Data Protection Supervisor provides a clear definition that guides our approach:

"Explainable Artificial Intelligence (XAI) is the ability of AI systems to provide clear and understandable explanations for their actions and decisions... by elucidating the underlying mechanisms of their decision making processes."

Kin Analytics translates this into a core business requirement: we need the ability to explain the business logic behind the use of features, not just point to the factors that influenced the score. We look for patterns that not only make statistical sense but also possess justifiable business rationale.

2.1. The Three Technical Demands of Explainability

For a system to be considered XAI, Kin Analytics ensures compliance with these three characteristics, which are crucial for credit risk management:

2.2. Safe and Sound: The Competitive Edge of White Box Models

For successful XAI integration, the most robust solution is the Self-Interpretable Model, or White Box Model.

These models, such as decision trees and linear regression, allow analysts to trace how input translates into output. Their interpretability is integrated into the system's core, facilitating the clear identification and tracking of the most influential features.
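As a minimal sketch of what tracing input to output can look like in a linear model, the example below splits a score into one contribution per feature. The intercept, weights, and feature names are assumptions chosen for illustration; they are not Kin Analytics' actual scorecard.

```python
# Hypothetical scorecard: all names and numbers are illustrative assumptions.
INTERCEPT = 600.0
WEIGHTS = {
    "years_in_business": 8.0,
    "debt_to_income": -120.0,
    "late_payments_12m": -25.0,
}


def explain_score(applicant):
    """Return the score and the contribution of each feature to it."""
    contributions = {
        name: weight * applicant[name] for name, weight in WEIGHTS.items()
    }
    total = INTERCEPT + sum(contributions.values())
    return total, contributions


applicant = {
    "years_in_business": 5,
    "debt_to_income": 0.5,
    "late_payments_12m": 2,
}
total, contributions = explain_score(applicant)

# List features from most to least influential (by absolute contribution).
ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
for name, value in ranked:
    print(f"{name:>20}: {value:+.1f}")
print(f"{'score':>20}: {total:.1f}")
```

Because the score is just the intercept plus a sum of weight-times-value terms, every point of the final number can be attributed to a specific input, which is the property an analyst or auditor relies on.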

At Kin Analytics, we rely on White Box Models because they offer decisive advantages:

- Total Interpretation: They allow for a complete understanding of all variables and how they are used to reach a final decision.

- Business Control: Feature weights are designed as a combination of data-driven processes and strategic business considerations. The machine suggests; the expert controls.

- Complete Traceability: They offer complete traceability of the scoring process, from input data to the final score.

- Efficient Human Review: They allow the analyst to conduct a human review focused only on the input variables flagged as critical by the model, drastically shortening manual processing times.

- Transparent Customer Justification: Automated decisions offer clear, specific explanations to customers, enhancing user experience and reputation.

- Inherent Regulatory Compliance: By their transparent nature, these models are intrinsically compliant with regulatory demands, providing a justifiable basis for every outcome.
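The traceability and focused human review described in the list above can be sketched with a small hand-written decision tree that records the path it took and the variables that drove a non-approval. The rules, thresholds, and field names are hypothetical and serve only to show the idea.

```python
def decide(applicant):
    """Return (decision, path, flagged) for a toy rule-based credit tree.

    path    : the conditions evaluated, in order, for a full audit trail
    flagged : the input variables an analyst should look at first
    All thresholds are illustrative assumptions.
    """
    path = []
    flagged = []

    if applicant["years_in_business"] < 2:
        path.append("years_in_business < 2")
        flagged.append("years_in_business")
        if applicant["has_guarantor"]:
            path.append("has_guarantor is True")
            return "manual_review", path, flagged
        path.append("has_guarantor is False")
        flagged.append("has_guarantor")
        return "decline", path, flagged

    path.append("years_in_business >= 2")
    if applicant["late_payments_12m"] > 3:
        path.append("late_payments_12m > 3")
        flagged.append("late_payments_12m")
        return "manual_review", path, flagged

    path.append("late_payments_12m <= 3")
    return "approve", path, flagged


decision, path, flagged = decide(
    {"years_in_business": 1, "has_guarantor": True, "late_payments_12m": 0}
)
print(decision)
print(" -> ".join(path))
print("review first:", flagged)
```

The recorded path doubles as the customer-facing justification, and the flagged list tells the analyst which inputs to check instead of re-reading the whole application.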

When White Box Models are employed alongside the correct development process, balanced with business needs, a powerful tool emerges. This tool offers exceptional decision-making results while being inherently justifiable and fully compliant.
