February 2, 2026

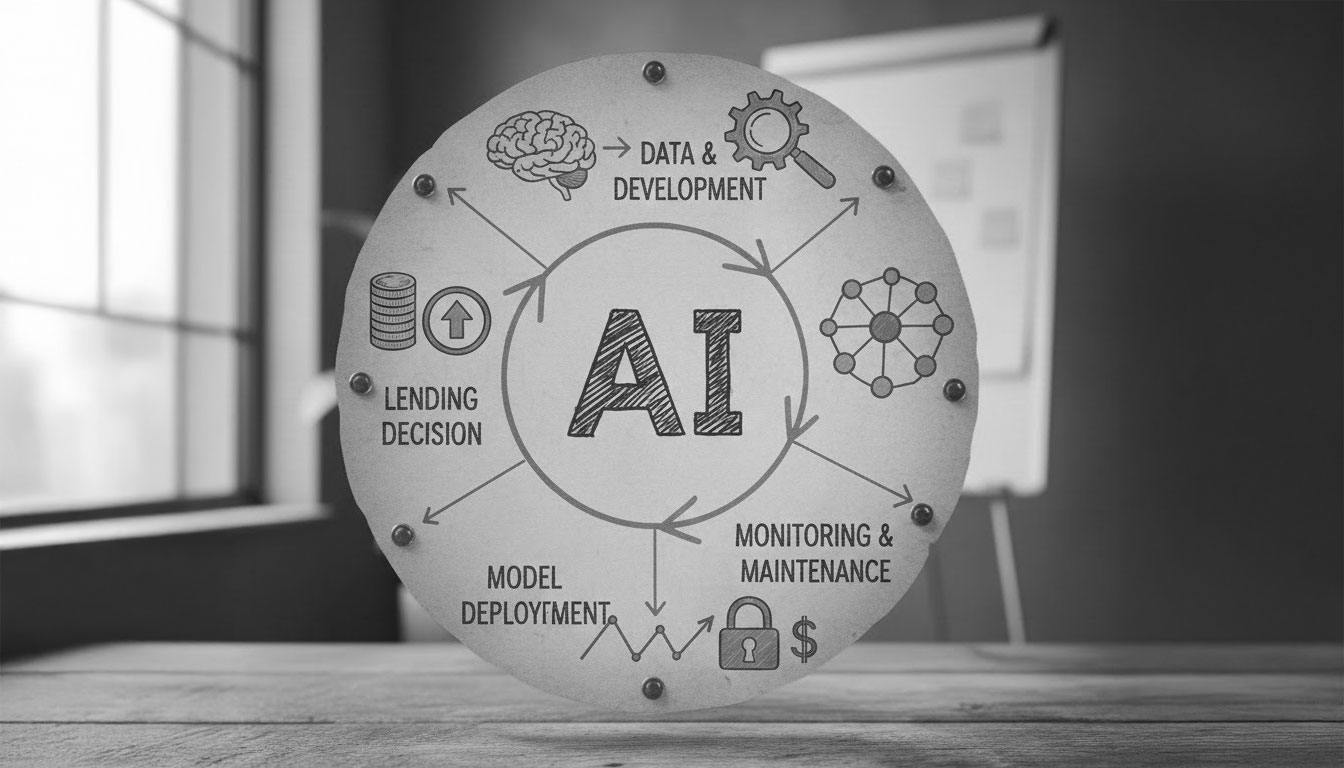

6 Critical AI Lifecycle Stages in Lending: Why Models Decay

AI Lifecycle in Lending: Why Models Decay in Silence

Imagine you’ve spent months building the "perfect" decision engine. On day one, it’s a masterpiece. It’s fast, precise, and your team is celebrating. But here’s the uncomfortable truth: from that moment, your model starts to become less effective. It doesn’t happen with a loud crash or an error message. It happens quietly. In finance, this "silent decay" shows why a disciplined AI lifecycle isn't just a technical choice; it’s crucial for survival.

At Kin Analytics, we’ve observed this in banks and fintechs alike. A model works perfectly for a quarter, and then, almost unnoticed, it begins to approve risky loans or reject good customers. If you aren't actively managing the AI model lifecycle, you’re not just losing accuracy; you’re losing money.

This guide is for leaders who want to stop the decline. We outline the six stages of AI lifecycle management that keep your models sharp, reduce risk, and strengthen your underwriters' confidence.

What Exactly is the AI Lifecycle in Lending?

To keep it simple, think of the AI lifecycle as the "biography" of your credit model. It covers everything from the moment the first line of code is written to the day the model is finally decommissioned.

In lending, this isn't a straight path. It’s a never-ending cycle. Unlike traditional software, which usually continues to follow your initial instructions indefinitely, AI makes educated guesses based on past data. If the surrounding environment changes, those guesses become less accurate.

Why It’s a Circle, Not a Line

Once you put a model into "production," meaning it’s actually making decisions, the clock starts ticking. You have to monitor it, adjust it, teach it new things, and sometimes start from scratch. This is the essence of AI lifecycle management. If you treat a model like a "set and forget" tool, you’re allowing a 2022 brain to try to navigate a 2026 economy. It simply won’t work.

The Silent Killer: Data vs. Model Drift

Why do smart models become "dumb"? It usually comes down to drift, which is why model drift detection matters so much. Even the best algorithms can lose touch with reality. We typically see this happen in two ways:

1. Data Drift (When the "Who" Changes)

This occurs when the people asking for loans today are different from those you trained the model on.

- The Scenario: You trained your model on suburban homeowners with 10-year job histories.

- The Reality: Your marketing team launches a campaign targeting gig-economy freelancers in their 20s.

- The Result: Your model now faces a "new" world it doesn’t recognize. This is Data Drift.

2. Model Drift (When the "Why" Changes)

This is more serious, because the data might look the same while its meaning has shifted. It is often called concept drift.

- The Scenario: Inflation rises or a major local industry collapses.

- The Reality: A borrower’s debt level that seemed "safe" last year is now a huge red flag because living costs doubled.

- The Result: The link between the data and the outcome has changed, so the same inputs now signal a different level of risk.

In lending, the world changes faster than your code. Catching these shifts is the whole point of a healthy AI lifecycle.
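One way to tell the two kinds of drift apart: data drift shows up as a change in who applies, while model (concept) drift shows up as a change in outcomes for applicants who look the same. The minimal Python sketch below checks for the second case. It groups loans into bands of a single input and flags bands whose observed default rate moved by more than a tolerance between a reference period and a recent one. The function names, the band labels (debt-to-income ranges) and the 5-percentage-point tolerance are all hypothetical choices for illustration.

```python
from collections import defaultdict


def bad_rate_by_band(records):
    """Return {band: share of loans in that band that defaulted}.

    records: iterable of (band, defaulted) pairs, where defaulted is 0 or 1.
    """
    totals = defaultdict(int)
    bads = defaultdict(int)
    for band, defaulted in records:
        totals[band] += 1
        bads[band] += defaulted
    return {band: bads[band] / totals[band] for band in totals}


def concept_drift_report(reference, recent, tolerance=0.05):
    """Flag bands whose observed bad rate moved by more than `tolerance`
    (absolute) between the reference and the recent period.

    The tolerance is an illustrative assumption, not an industry standard.
    """
    ref_rates = bad_rate_by_band(reference)
    new_rates = bad_rate_by_band(recent)
    flagged = {}
    # Only compare bands that appear in both periods.
    for band in ref_rates.keys() & new_rates.keys():
        change = new_rates[band] - ref_rates[band]
        if abs(change) > tolerance:
            flagged[band] = round(change, 4)
    return flagged


# Hypothetical data: same debt-to-income bands, different outcomes.
reference = ([("<30%", 1)] * 2 + [("<30%", 0)] * 98
             + [("30-40%", 1)] * 4 + [("30-40%", 0)] * 96)
recent = ([("<30%", 1)] * 3 + [("<30%", 0)] * 97
          + [("30-40%", 1)] * 12 + [("30-40%", 0)] * 88)
print(concept_drift_report(reference, recent))
```

Here only the 30-40% band is flagged: its default rate rose from 4% to 12% even though the band itself means the same thing on paper. Because defaults take time to surface, a check like this can only run on loans whose outcomes have matured, which is one reason drift in meaning is harder to catch than drift in the applicant mix.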

The 6 Stages of a Resilient AI Lifecycle

At Kin Analytics, we don't just build models; we help you operate them. Here are the six pillars we use to keep AI healthy and profitable.

1. Train: Building for "Battle," Not the Lab

Every AI lifecycle starts with a goal. Are you looking to approve more loans or reduce your loss rate? You can't always do both. Before writing any code, we examine the data.

- Cleanliness: If you feed in "garbage," you get "garbage" out.

- Fairness: We check for any biases that might cause the model to treat people unfairly. This is a necessary part of a Responsible AI lifecycle.

- The Anchor: We define success metrics up front, such as the Gini coefficient or AUC-ROC to measure how well the model separates good borrowers from bad ones, and tie them to the business goal. This strategic anchor keeps the AI lifecycle in lending grounded in real risk, not just laboratory theory.
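For readers who want to see how these two metrics relate, here is a minimal Python sketch with illustrative function names. AUC-ROC is computed in its rank form: the probability that a randomly chosen defaulter receives a higher risk score than a randomly chosen non-defaulter, with ties counted as half. The Gini coefficient used in credit scoring then follows as 2 * AUC - 1. The pairwise loop is chosen for clarity; production code would normally rely on a library implementation.

```python
def auc_roc(scores, labels):
    """AUC as the probability that a random defaulter (label 1) gets a
    higher risk score than a random non-defaulter (label 0).

    Ties count as half a win. O(n_bad * n_good), fine for a sketch.
    """
    bads = [s for s, y in zip(scores, labels) if y == 1]
    goods = [s for s, y in zip(scores, labels) if y == 0]
    if not bads or not goods:
        raise ValueError("need at least one defaulter and one non-defaulter")
    wins = 0.0
    for b in bads:
        for g in goods:
            if b > g:
                wins += 1.0
            elif b == g:
                wins += 0.5
    return wins / (len(bads) * len(goods))


def gini(scores, labels):
    """Gini coefficient as used in credit scoring: 2 * AUC - 1."""
    return 2 * auc_roc(scores, labels) - 1


# Toy risk scores (higher = riskier) and outcomes (1 = defaulted).
scores = [0.9, 0.4, 0.3, 0.2, 0.6]
labels = [1, 1, 0, 0, 0]
print(auc_roc(scores, labels), gini(scores, labels))
```

On this toy data the model ranks one defaulter (score 0.4) below one good borrower (score 0.6), giving an AUC of about 0.83 and a Gini of about 0.67. A random model scores an AUC of 0.5, which is a Gini of 0.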

2. Deploy: The Model Meets Reality

Once the model is ready, it’s time for deployment. This is when the model leaves the digital folder and enters the real world where underwriters use it.

We always recommend a "Shadow Mode" during this stage. The model makes "ghost decisions" in the background without affecting real customers. We then compare those decisions to what your human experts choose. It’s the best way to build trust before giving full control to the machine.
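The comparison at the heart of shadow mode can be sketched in a few lines of Python. The function below assumes, hypothetically, that ghost decisions and underwriter decisions are logged as matching lists of "approve"/"decline" for the same applications. It reports the overall agreement rate and splits disagreements by direction, because the two kinds carry different costs. The same calculation, run on live decisions, gives the override rate tracked during monitoring.

```python
from collections import Counter


def shadow_comparison(model_decisions, human_decisions):
    """Compare shadow-mode ghost decisions with underwriters' decisions.

    Both arguments are equal-length lists of "approve" / "decline" for the
    same applications (an assumed logging format).
    """
    if len(model_decisions) != len(human_decisions):
        raise ValueError("decision lists must be the same length")
    if not model_decisions:
        raise ValueError("no decisions to compare")
    # Count each (model, human) combination.
    pairs = Counter(zip(model_decisions, human_decisions))
    agree = sum(n for (m, h), n in pairs.items() if m == h)
    return {
        "agreement_rate": agree / len(model_decisions),
        # Model would approve, human declined: potential extra risk.
        "model_approve_human_decline": pairs[("approve", "decline")],
        # Model would decline, human approved: potential lost business.
        "model_decline_human_approve": pairs[("decline", "approve")],
    }


model = ["approve", "approve", "decline", "decline", "approve"]
human = ["approve", "decline", "decline", "approve", "approve"]
print(shadow_comparison(model, human))
```

In this toy run the model and the underwriters agree on 3 of 5 applications (60%), with one disagreement in each direction.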

3. Monitor: Watching the Heartbeat

Once the model is in use, it needs constant monitoring. This is a crucial part of the MLOps lifecycle.

We provide dashboards that track more than just "is it working?" We monitor:

- Decision Concordance: How often do your human underwriters disagree with the AI? If they override the model 30% of the time, you have a trust or decay problem.

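- Population Stability Index (PSI): This serves as our "smoke detector" for drift. It measures how far today’s applicants, or their scores, have moved from the population the model was trained on. A common rule of thumb treats a PSI above 0.1 as a warning and above 0.25 as a significant shift.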
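Here is a minimal Python sketch of the PSI calculation. It assumes the common convention of cutting bins at deciles of the reference (training) sample and then sums (actual share - expected share) * ln(actual share / expected share) over the bins. The bin count and the small floor that avoids taking the log of zero are assumptions, and teams vary both.

```python
import math
from bisect import bisect_right


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index between a reference sample (e.g. the
    training population) and a recent sample of the same score or feature.

    Bins are cut at quantiles of the reference sample, so each reference
    bin holds roughly the same share. `eps` floors empty bins so the log
    term stays finite. Both samples must be non-empty.
    """
    ref = sorted(expected)
    # Interior cut points at the reference quantiles (n_bins - 1 of them).
    edges = [ref[int(len(ref) * k / n_bins)] for k in range(1, n_bins)]

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_scores = list(range(1000))
recent_scores = [v + 500 for v in training_scores]  # a large shift
print(psi(training_scores, training_scores))  # identical samples
print(psi(training_scores, recent_scores))
```

Identical samples give a PSI of 0, while the shifted sample produces a value far above any usual alert threshold.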

4. Calibration: The Low-Cost "Tune-Up"

Sometimes a model isn't "broken"; it just needs a minor adjustment. This is calibration.

Imagine your model still knows who is a "good" or "bad" borrower, but due to a change in interest rates, actual default rates have shifted across the board. Instead of building a new model, you just adjust how scores translate into probabilities. It’s quick, inexpensive, and a vital step in the AI model lifecycle.
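The simplest version of this tune-up, often called calibration-in-the-large, shifts every borrower's log-odds by the same amount so that the average predicted probability of default (PD) matches the default rate now being observed. Because the shift is identical for everyone, the ranking of borrowers does not change. The Python sketch below is an illustration, not Kin Analytics' method. The function names are hypothetical, and it solves for the shift by bisection. Fuller recalibrations, such as Platt scaling, also adjust the slope.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def recalibrate_intercept(pds, observed_default_rate, tol=1e-10):
    """Find one log-odds shift `delta` so the mean adjusted PD equals the
    observed default rate on recent data.

    The mean adjusted PD increases with delta, so bisection converges.
    """
    if not 0 < observed_default_rate < 1:
        raise ValueError("observed default rate must be strictly between 0 and 1")
    z = [logit(p) for p in pds]

    def mean_pd(delta):
        return sum(sigmoid(zi + delta) for zi in z) / len(z)

    lo, hi = -20.0, 20.0  # assumed search range for the shift
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean_pd(mid) < observed_default_rate:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def apply_shift(pds, delta):
    """Apply the same log-odds shift to every PD (ranking is preserved)."""
    return [sigmoid(logit(p) + delta) for p in pds]


pds = [0.02, 0.05, 0.10]  # model PDs, averaging about 5.7%
delta = recalibrate_intercept(pds, observed_default_rate=0.10)
print(apply_shift(pds, delta))
```

After the shift, the three PDs average 10%, matching the hypothetical observed rate, and they remain in the same order.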

5. Retrain: When It’s Time to Go Back to School

If a simple adjustment doesn’t work, you need to retrain the AI model. This is "major surgery". You take the model back to the lab and provide it with the most recent 6 to 12 months of data.

You should initiate this when:

- The model’s accuracy falls below your set thresholds.

- Your business strategy changes (e.g., you launch a new product).

- Your periodic governance review calls for it (many banks review each model at least annually, in line with model risk management guidance such as the Federal Reserve’s SR 11-7).
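These triggers can be written down as an explicit rule, so the decision to retrain is documented rather than ad hoc. In the minimal Python sketch below, the field names and the default thresholds (an AUC floor of 0.70 and a 12-month review cycle) are illustrative assumptions. Replace them with your own policy.

```python
from dataclasses import dataclass


@dataclass
class ModelHealth:
    # Hypothetical snapshot produced by a monitoring job.
    current_auc: float
    months_since_review: int
    product_changed: bool


def retrain_reasons(h, min_auc=0.70, review_months=12):
    """Return the triggers that fired. An empty list means no retraining
    is called for under these illustrative thresholds."""
    reasons = []
    # Trigger 1: accuracy below the agreed floor.
    if h.current_auc < min_auc:
        reasons.append(f"AUC {h.current_auc:.2f} below floor {min_auc:.2f}")
    # Trigger 2: business strategy or product change.
    if h.product_changed:
        reasons.append("business strategy or product changed")
    # Trigger 3: periodic governance review is due.
    if h.months_since_review >= review_months:
        reasons.append(f"{h.months_since_review} months since last review")
    return reasons


print(retrain_reasons(ModelHealth(current_auc=0.65, months_since_review=13,
                                  product_changed=True)))
```

Returning the list of reasons, rather than a bare yes or no, leaves an audit trail of why each retraining was started.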

6. Retire: Knowing When to Say Goodbye

Every model has an expiration date. Retiring a model is a matter of financial hygiene, not failure. If a product line ends or better technology emerges, shut the old model down. "Zombie models" that keep running after their purpose has ended pose operational risks you don’t need.

Why AI Lifecycle Management Matters for Your Bottom Line

If you’re a CRO or a CEO, this isn't just a "tech thing"; it’s a "money thing". Managing the AI lifecycle brings three significant benefits:

- Lower Losses: Catching drift 90 days earlier means three fewer months of mispriced approvals, which on a large portfolio can save millions in bad debt.

- Higher Efficiency: When underwriters trust the model, they spend less time on manual reviews and more on high-value strategy.

- Audit Peace of Mind: When a regulator asks, "Why did you reject these people?", you have a documented, Responsible AI lifecycle that shows your decisions were fair and based on data.

MLOps and Responsible AI: Breaking the Jargon

You'll hear these terms a lot. Let’s clarify them:

- MLOps lifecycle: This is just the "plumbing" that keeps the AI running smoothly. It’s the team and tools ensuring monitoring happens every day without manual checks.

- Responsible AI lifecycle: This is the "conscience" of your model. It ensures your AI doesn't inadvertently discriminate based on residence or age.

Both are vital. Without MLOps, your model decays unnoticed. Without Responsible AI, your business faces legal and ethical risks.
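A first-pass fairness screen of the kind a Responsible AI lifecycle relies on can be as simple as comparing approval rates across groups. The Python sketch below divides each group's approval rate by a reference group's rate. The group labels and the 0.8 cutoff (the "four-fifths" heuristic borrowed from US employment practice) are illustrative assumptions. A low ratio is a prompt for investigation, not proof of discrimination.

```python
from collections import defaultdict


def approval_rate_ratios(decisions, reference_group):
    """decisions: iterable of (group, approved) pairs, approved is a bool.

    Returns each group's approval rate divided by the reference group's.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    rates = {g: approvals[g] / totals[g] for g in totals}
    ref_rate = rates[reference_group]
    if ref_rate == 0:
        raise ValueError("reference group has no approvals")
    return {g: r / ref_rate for g, r in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the (assumed) screening threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Hypothetical decisions: group A approved 60/100, group B approved 42/100.
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 42 + [("B", False)] * 58)
ratios = approval_rate_ratios(decisions, reference_group="A")
print(ratios, flag_groups(ratios))
```

Group B's approval rate is 70% of group A's, so it is flagged for review.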

Conclusion: Treat Your AI Like a Living Asset

The AI lifecycle in lending goes beyond just smart math. It’s about caring for a tool that helps your business grow. Models are created with a purpose, but they only remain effective if you help them adapt to a changing world.

If you want to modernize your credit decisioning, remember:

- Start with a clear goal.

- Examine your data for bias.

- Monitor adoption, not just accuracy.

At Kin Analytics, we believe the true value of AI emerges after the "Go-Live" button is pushed. Through disciplined AI lifecycle management, you can reduce risk and create a credit program ready for whatever the economy throws at it next.

Is your decision engine starting to feel outdated? Contact Kin Analytics today. Let’s check the heartbeat of your models and ensure your AI lifecycle is prepared for the long haul.

FAQ: Key Questions about the AI Lifecycle

1. What is the AI lifecycle in finance?

It is the end-to-end process of building, deploying, monitoring, maintaining, and retiring AI models used in financial decisioning, such as credit approvals, pricing, and risk assessment.

2. Why does model degradation occur?

Model degradation mainly occurs due to model drift, when economic relationships change, and data drift, when the profile of borrowers or loan applications evolves over time.

3. What does an MLOps team do in the AI lifecycle?

An MLOps team automates model monitoring, drift detection, deployment pipelines, and retraining processes to ensure AI systems remain reliable and scalable in production.

4. Is it mandatory to retire a model after a fixed period?

There is no fixed expiration date, but AI governance frameworks recommend periodic reviews, often annually. When a model no longer reflects business or market reality, retirement becomes the most responsible option.
